The Power of Retrieval-Augmented Generation (RAG) in LLMs

Introduction

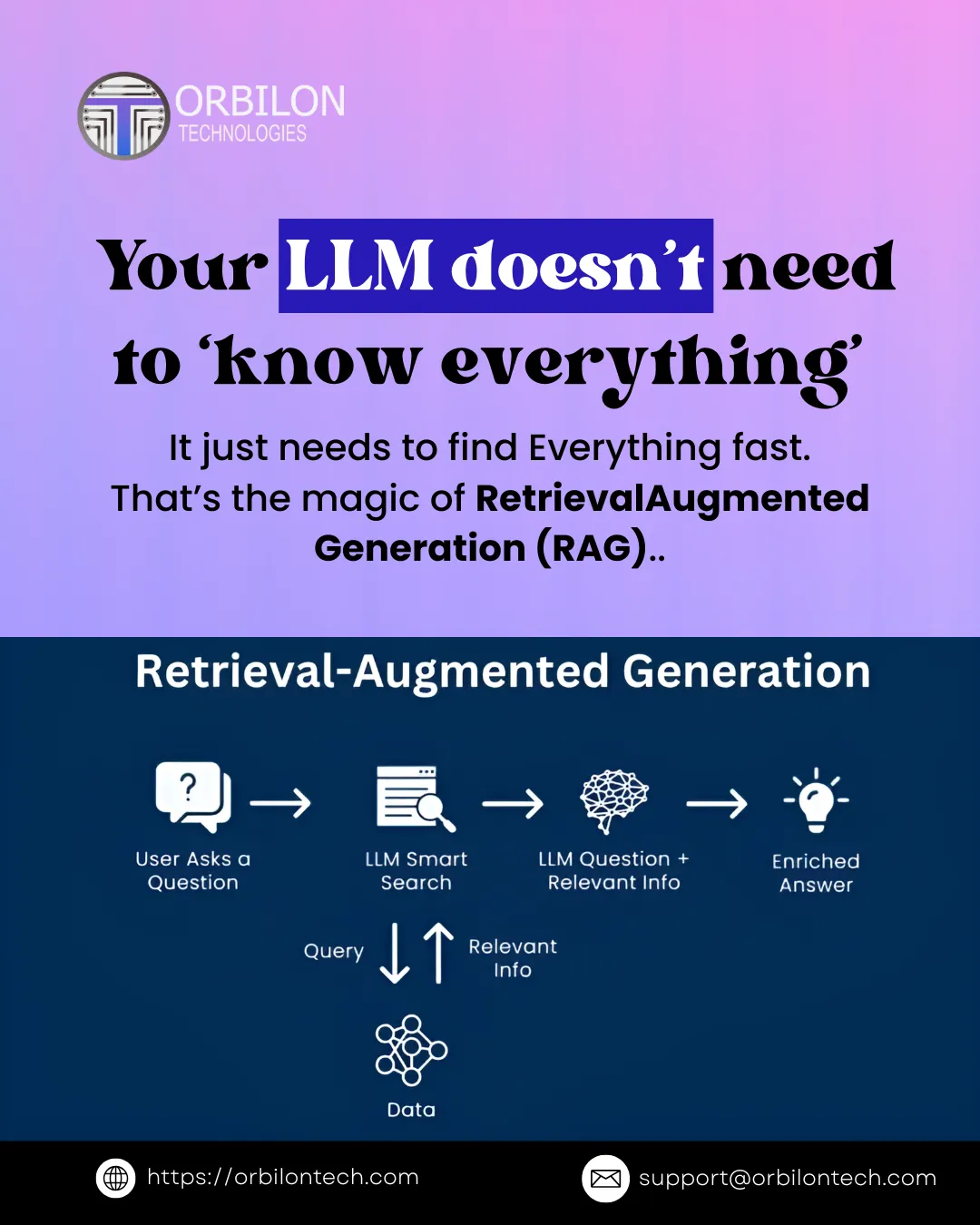

Large language models (LLMs) are dramatically changing the way enterprises handle and interpret data. But your LLM does not need to “know everything.” What it needs is the ability to find the right information quickly. That is the idea behind Retrieval-Augmented Generation (RAG), a technique that improves AI in terms of speed, intelligence, and trustworthiness.

What is RAG (Retrieval-Augmented Generation)?

RAG (Retrieval-Augmented Generation) is an AI technique that combines information retrieval with text generation. An LLM powered by RAG is no longer limited to its pre-trained data: it can look up, fetch, and use the most relevant information to give accurate, up-to-date responses. This hybrid approach bridges the gap between static training data and current real-world data.

How RAG Works: A Simple Process

- User Asks a Question: The user submits a question to the LLM-powered application.

- Smart Search: The system searches the connected databases, APIs, or documents for the most relevant and up-to-date information.

- Query + Relevant Info: The retrieved information is combined with the user's question into an augmented prompt, so the LLM can use it alongside what it already learned during training.

- Enriched Answer: The LLM generates a detailed, accurate, and context-aware answer for the user.

With this approach, an LLM works more like a real-time knowledge engine: its answers stay current as the underlying information changes, without retraining the model.
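The four steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: a toy keyword-overlap retriever stands in for the embedding-based vector search real systems typically use, the sample documents are invented, and `generate` is a hypothetical placeholder for a call to whatever LLM you use. None of these names come from a specific library.

```python
import re

# Sample knowledge base (invented for illustration). In a real system this
# would be a database, an API, or an indexed document collection.
DOCUMENTS = [
    "Refunds are accepted within 30 days of purchase with a receipt.",
    "Our support line is open Monday to Friday, 9am to 5pm.",
    "Premium plans include priority support and a dedicated account manager.",
]

# Common words ignored in the query, so they don't inflate relevance scores.
STOPWORDS = {"a", "an", "the", "to", "do", "i", "is", "of", "and", "with",
             "how", "what", "are", "my", "for", "in", "on", "can"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Step 2 (search): return up to k documents sharing the most words with
    the query. A toy stand-in for embedding-based vector search."""
    query_terms = set(tokenize(query)) - STOPWORDS
    scored = []
    for doc in documents:
        overlap = len(query_terms & set(tokenize(doc)))
        if overlap > 0:
            scored.append((overlap, doc))
    # Highest score first; the sort is stable, so ties keep their original order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(query: str, passages: list[str]) -> str:
    """Step 3 (augment): combine the user's question with the retrieved context."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


def generate(prompt: str) -> str:
    """Step 4 (generate): hypothetical placeholder for a real LLM call."""
    return f"[LLM answer based on a {len(prompt)}-character prompt]"


def answer(query: str, documents: list[str]) -> str:
    """Run the full pipeline for one user question (step 1)."""
    passages = retrieve(query, documents)
    prompt = build_prompt(query, passages)
    return generate(prompt)


if __name__ == "__main__":
    print(answer("How many days do I have to get a refund?", DOCUMENTS))
```

Because the knowledge lives in the document list rather than in the model's weights, appending a new document makes it retrievable on the very next query. Updating what the system knows is a data change, not a training run.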

Why RAG is Essential for Businesses of the Future

In industries such as healthcare, finance, and technology, where data changes rapidly, conventional AI models struggle to keep up with the latest developments. By using RAG, companies can:

- Improve accuracy by grounding responses in up-to-the-minute data

- Expect fewer hallucinations, since answers rely less on the model's possibly outdated internal memory

- Build user trust by providing data-backed answers that are easy to verify

- Free users from mundane, time-consuming work by automating search and answer drafting

In short, RAG is what lets AI function as a live assistant rather than a static knowledge base.
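Verifiable answers depend on keeping track of where each retrieved passage came from. The sketch below shows one common pattern under stated assumptions: each passage carries a source identifier (the `Passage` type and the file names are hypothetical), the prompt numbers the passages, and the model is asked to cite them as `[n]`. The `[n]` convention is an illustrative choice, not a feature of any particular LLM API. A helper then maps the citations in an answer back to their sources so a user can check them.

```python
import re
from dataclasses import dataclass


@dataclass
class Passage:
    """A retrieved snippet plus an identifier for where it came from."""
    source: str  # hypothetical identifier, e.g. a file name or URL
    text: str


def build_cited_prompt(question: str, passages: list[Passage]) -> tuple[str, dict[int, str]]:
    """Number each passage so the model can cite it as [1], [2], ...

    Returns the prompt and a mapping from citation number to source.
    """
    citations: dict[int, str] = {}
    lines = []
    for number, passage in enumerate(passages, start=1):
        citations[number] = passage.source
        lines.append(f"[{number}] {passage.text}")
    prompt = (
        "Answer using only the numbered sources below, citing them like [1].\n"
        + "\n".join(lines)
        + f"\nQuestion: {question}"
    )
    return prompt, citations


def cited_sources(answer: str, citations: dict[int, str]) -> list[str]:
    """List the sources the answer actually cites, in citation-number order.

    Markers that don't match a known passage (e.g. an invented [7]) are dropped.
    """
    used = sorted({int(n) for n in re.findall(r"\[(\d+)\]", answer)})
    return [citations[n] for n in used if n in citations]


if __name__ == "__main__":
    passages = [
        Passage("refund-policy.md", "Refunds are accepted within 30 days of purchase."),
        Passage("support-hours.md", "Support is open Monday to Friday."),
    ]
    prompt, citations = build_cited_prompt("What is the refund window?", passages)
    print(prompt)
    # This answer string stands in for a real model response.
    print(cited_sources("You have 30 days to request a refund [1].", citations))
```

Dropping citation numbers that don't match a retrieved passage is a small safeguard: a model can still produce a marker that points nowhere, and this check keeps such markers from being presented to the user as real sources.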

Real-World Applications of RAG

- Customer Support: LLMs retrieve the latest product or policy details to give correct, up-to-date responses.

- Healthcare: AI systems consult the newest medical research before offering advice or insights.

- Research & Analytics: Teams can query the system in natural language and get immediate, citation-backed results that can be checked against their sources.

- Enterprise Solutions: LLMs can securely query confidential internal databases to simplify and improve knowledge management.

Final Thoughts

Your LLM doesn’t need to know everything; it needs to find the right information quickly. That is the core idea behind Retrieval-Augmented Generation (RAG). With it, companies can turn AI from a static tool that reflects the past into a dynamic one, making it a strong partner in innovation.

Want to Hire Us?

Are you ready to turn your ideas into reality? Hire Orbilon Technologies today and start working right away with qualified resources. We take care of everything: design, development, security, quality assurance, and deployment. We are just a click away.