AI Costs Dropped 280x

Introduction

Remember when artificial intelligence initiatives were put on hold for being “too costly”? That story has been rewritten. The price structure of AI has changed dramatically, and that change is the main driver of a technological wave opening up new opportunities for companies of any size. The most recent AI Index Report published by Stanford University highlights that the cost of AI inference at GPT-3.5-level performance has dropped by a factor of about 280 since November 2022. A project that would have cost your company $396,000 a year now runs at about $1,392. Take a moment to let that sink in. This is not a matter of a few incremental improvements; it is a total overhaul of AI economics.

However, here is what the headlines are not telling you: while the per-unit cost of AI is falling drastically, it is the companies capable of exploiting this change that are seeing their total operational costs drop by 30-40%. The ones that don’t realize this are still wasting money on poorly implemented operations while their competitors pull ahead at high speed.

Why Are AI Costs Falling?

It is not the case that AI costs are dropping just because cloud computing has become cheaper. The reason why they are falling is that five major shifts are converging:

- Architectural Innovation (MoE Models): Newer architectures such as Mixture of Experts (MoE) activate only the parts of the model needed for each query rather than the entire model, which dramatically reduces wasted compute. Models like DeepSeek R1 are reported to be 20 to 50 times cheaper than traditional dense transformer models, thanks to smarter design rather than reduced capability.

- Open-Source Competition: Open models like Llama 3.x have set off a price war. On platforms like Together.ai, inference costs went down from $60 per million tokens (2021) to $0.06, a 1,000-fold reduction within three years.

- Better Hardware Choices: GPUs are no longer the only option. For example, Google TPUs can give 4 times better cost-performance for inference. Midjourney was able to reduce its inference cost by 65%, from $2M to $700K per month, just by switching to TPUs.

- Software & Edge Optimization: With edge AI, computation happens closer to the data sources, so latency can drop from hundreds of milliseconds to less than 15ms, and cloud bandwidth costs can fall by up to 82%. Major retailers that have implemented edge AI across 1,000+ stores are already benefiting from these gains.

- Quantization & Compression: Current methods can preserve around 99% of accuracy while using only about 25% of the original compute, so immediate, production-ready cost savings are possible.
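
To make the quantization point concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It assumes weights originally stored as 32-bit floats, so an int8 copy takes 1 byte instead of 4, which is one way a figure like 25% can arise. The function names and sample weights are illustrative, and production toolchains use more refined schemes.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the int8 representation."""
    return [q * scale for q in quantized]


# Illustrative weights, not taken from any real model.
weights = [0.12, -0.53, 0.98, -0.07, 0.31]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Storage ratio, assuming float32 (4 bytes) originals and int8 (1 byte) copies.
size_ratio = 1 / 4
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(f"size ratio: {size_ratio:.0%}, max round-trip error: {max_error:.4f}")
```

The round-trip error per weight is bounded by half the scale factor, which is why accuracy often survives the smaller representation. Whether it holds at the 99% level for a given model has to be measured on that model.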

Real-World AI Cost Reduction

Organizations are cutting costs at scale by deploying AI where it directly impacts operations:

- Manufacturing (Predictive Maintenance): An automotive manufacturer cut unplanned downtime by 41%, reduced maintenance costs by 36%, and saved $127M annually by using AI to predict failures, automate scheduling, and optimize parts ordering. Continuous learning drove 20% additional ROI each year.

- Customer Support (AI Chatbots): AI-driven support reduced cost per ticket from $12 to $0.80, handled 10x more inquiries, resolved 92% without humans, and improved customer satisfaction, cutting overall support costs by 30-40%.

- Supply Chain (Demand Forecasting): A global retailer reduced carrying costs by 25%, cut stockouts by 30%, saved $200K per large store annually, and reduced waste by 60% using AI-powered demand prediction and automated procurement.

- Document Processing (Automation): An accounting firm reduced processing time from 20 hours to 2 hours per week, lowered errors from 8% to 0.4%, saved $40K annually, and improved client satisfaction by 25%, while uncovering errors humans missed.

Bottom line: AI cost reduction isn’t theoretical; it’s coming from automation, prediction, and continuous learning embedded directly into core business workflows.

Industries Poised for the Biggest AI Gains

AI cost reduction has a broad effect, but some industries see an outsized impact:

- Healthcare & Biotech: Documentation-heavy and knowledge-work-heavy areas achieve 40-50% time savings. AI takes over records, claims, and diagnostics. Omega Healthcare saved 15,000 employee hours per month, delivering a 30% ROI.

- Financial Services: AI handles a huge number of transactions and contracts. JPMorgan does in seconds the contract review that would take 360,000 hours of lawyers’ work, while AI fraud detection is saving billions and also cutting false positives.

- Logistics & Transportation: AI-powered routing and maintenance have led to 10-15% cost savings. UPS achieved a 15% reduction in fuel consumption and also improved delivery times by using AI route optimization.

- E-commerce & Retail: AI optimizes inventory, pricing, and personalization in real time, which both boosts margins and sharpens competitiveness.

- Manufacturing: Through predictive maintenance and AI vision, unplanned downtime is reduced by 25-50%, and defects are caught at 99.9% accuracy, so waste and rework are cut drastically.

The bottom line is: Industries characterized by large volumes of data, repetitive processes, and tight margins are the ones that benefit the most from AI-driven cost reduction.

The Implementation Process: Getting It Right

Most organizations fail by focusing on tools instead of strategy. What actually works starts with a clear framework:

Phase 1: Strategic Assessment (Weeks 1–3)

- Identify High-Impact Areas: Target high-volume, manual, or rules-based processes. By applying the 80/20 rule, a small number of workflows usually account for most of the potential AI cost savings.

- Calculate True Total Cost of Ownership (TCO): Don’t limit the TCO analysis to software pricing. Consider integration, infrastructure, training, maintenance, and deployment time as well. A cheap tool with expensive customization is not a cost-win.

- Define Clear Metrics: Steer clear of vague goals. Establish measurable outcomes such as “reduce processing time by 60% while maintaining 99.5% accuracy.” Capture baselines upfront so you can prove ROI.

- Assess Organizational Readiness: Good quality data, stakeholder alignment, and executive sponsorship are important. 52% of AI failures are due to readiness issues, not technology.

Bottom line: AI success starts with thorough cost analysis, clear metrics, and organizational readiness rather than vendor selection.
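
As a rough illustration of the TCO point, the sketch below adds up the cost categories named above for two hypothetical tools. All figures and the `TcoInputs` structure are made up for the example; the cost of deployment time is left out for brevity and would be one more field.

```python
from dataclasses import dataclass


@dataclass
class TcoInputs:
    """Annual costs in one currency; every value used below is hypothetical."""
    software: float
    integration: float
    infrastructure: float
    training: float
    maintenance: float


def total_cost_of_ownership(costs: TcoInputs) -> float:
    """Sum every cost category, not just the software price."""
    return (costs.software + costs.integration + costs.infrastructure
            + costs.training + costs.maintenance)


cheap_tool = TcoInputs(software=5_000, integration=60_000, infrastructure=10_000,
                       training=8_000, maintenance=12_000)
pricier_tool = TcoInputs(software=30_000, integration=10_000, infrastructure=10_000,
                         training=4_000, maintenance=6_000)

print(total_cost_of_ownership(cheap_tool))    # 95000
print(total_cost_of_ownership(pricier_tool))  # 60000
```

In this invented comparison, the tool with the lower license price ends up more expensive once customization is counted, which is the trap the TCO step is meant to catch.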

Phase 2: Pilot Development (Weeks 4–10)

- Start Small, But Real: Choose a tightly focused use case that has straightforward business value. A pilot that processes 100 real invoices per week is much more impressive than a theoretical system designed for 10,000.

- Use Existing Infrastructure: Deploy production-ready platforms such as AWS SageMaker, Google Vertex AI, or Azure ML to keep the upfront cost low and the validation fast. Deliver value first before you build custom stacks.

- Monitor from Day One: Track accuracy, latency, cost per operation, and user satisfaction, and quantify business impact as well. Review these metrics on the dashboards that stakeholders actually look at.

- Design for Failure: Anticipate uncertainty, edge cases, and bad data in your planning. Set up confidence thresholds, human handoffs, and graceful fallbacks so that failures are recognizable and controlled.

Bottom line: The pilots that succeed are practical, measurable, and resilient, not overengineered experiments.
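
The "Design for Failure" idea can be sketched as a small routing function. The thresholds, the `Prediction` type, and the route names are assumptions chosen for illustration, not recommended values.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float  # expected range: 0.0 to 1.0


def route(prediction, auto_threshold=0.90, review_threshold=0.60):
    """Decide what happens to a model output based on its confidence.

    Thresholds are placeholders; tune them against your own baselines.
    """
    if prediction is None or not 0.0 <= prediction.confidence <= 1.0:
        return "fallback"        # missing or malformed output: use the existing process
    if prediction.confidence >= auto_threshold:
        return "auto_approve"
    if prediction.confidence >= review_threshold:
        return "human_review"    # hand off to a person with the suggestion attached
    return "fallback"


print(route(Prediction("invoice", 0.97)))  # auto_approve
print(route(Prediction("invoice", 0.72)))  # human_review
print(route(Prediction("invoice", 0.20)))  # fallback
print(route(None))                         # fallback
```

Because every outcome has a named route, failures show up in the metrics as counts of `human_review` and `fallback` rather than as silent errors.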

Phase 3: Optimization (Weeks 11–16)

This phase is where AI cost reduction accelerates:

- Model Selection: Bigger isn’t better. Smaller models like Llama 3.2 3B often work better than larger ones on some tasks, at a fraction of the cost. Always test cost vs. performance with your real workloads.

- Prompt Engineering: Good prompts can reduce token usage by 40-60% without quality loss. Few-shot learning, structured reasoning, and context caching are some of the techniques that can halve costs and keep the output quality the same.

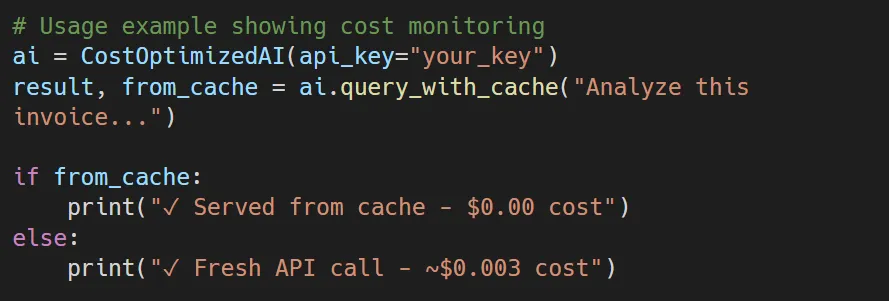

- Caching Strategies: Cache semantically for repeated or similar queries. Smart caching can cut API costs by up to 70% and keep the responses fresh.

- Batch Processing: If you don’t need the result immediately, batch jobs can give you 10-20x efficiency gains. Run reports, summaries, and historical analysis during off-peak hours to reduce computing costs.

Bottom line: Optimization results from a smarter model choice, tighter prompts, caching, and batching rather than the addition of more compute.
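
A minimal sketch of the caching idea follows. Word-overlap (Jaccard) similarity stands in for real semantic similarity so the example runs with the standard library alone; an actual semantic cache would compare embedding vectors. The class name, threshold, and time-to-live are illustrative assumptions.

```python
import time


class SimilarityCache:
    """Tiny cache that reuses answers for near-duplicate prompts.

    Word overlap is a stand-in for embedding similarity in this sketch.
    """

    def __init__(self, threshold=0.8, ttl_seconds=3600):
        self.threshold = threshold
        self.ttl_seconds = ttl_seconds
        self._entries = []  # list of (token_set, answer, stored_at)

    @staticmethod
    def _tokens(prompt):
        return frozenset(prompt.lower().split())

    def get(self, prompt, now=None):
        now = time.time() if now is None else now
        tokens = self._tokens(prompt)
        # Drop expired entries so stale answers are never served.
        self._entries = [e for e in self._entries if now - e[2] < self.ttl_seconds]
        for cached_tokens, answer, _ in self._entries:
            union = tokens | cached_tokens
            if union and len(tokens & cached_tokens) / len(union) >= self.threshold:
                return answer
        return None

    def put(self, prompt, answer, now=None):
        now = time.time() if now is None else now
        self._entries.append((self._tokens(prompt), answer, now))


cache = SimilarityCache(threshold=0.8, ttl_seconds=60)
cache.put("what is your refund policy", "Refunds within 30 days.", now=0)
print(cache.get("What is your refund policy", now=10))   # hit: same words
print(cache.get("how do I reset my password", now=10))   # None: different query
print(cache.get("what is your refund policy", now=120))  # None: entry expired
```

The time-to-live is what keeps responses fresh: a hit avoids an API call, while an expired entry forces a new one.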

Phase 4: Scale and Sustain (Week 17+)

- Gradual Rollout: Expand successful pilots step by step. Follow the 25-50-100 rule: validate at 25%, refine at 50%, then extend to full rollout, while monitoring cost, performance, and user satisfaction closely.

- Continuous Improvement: Design feedback loops to capture user corrections and edge cases. Properly managed AI systems, through continuous learning, become 15-20% more efficient every year.

- Cost Governance: Implement spending alerts, set usage limits, and conduct regular cost reviews. Employ AI-focused FinOps practices, along with tools such as CloudZero or Kubecost, for cost management at scale.

- Knowledge Transfer: Capture and share insights, train employees, and develop internal communities of practice. Companies that successfully scale AI build an in-house source of expertise rather than a long-term vendor dependency.
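
One way to implement a staged rollout like the one described in the first bullet is to assign each user a stable bucket and compare it against the current percentage. This is a sketch under that assumption; the function names and user IDs are hypothetical.

```python
import hashlib


def rollout_bucket(user_id: str) -> int:
    """Map a user to a stable bucket from 0 to 99 using a hash of the ID."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(user_id: str, rollout_percent: int) -> bool:
    """A user is included when their bucket falls below the current percentage."""
    return rollout_bucket(user_id) < rollout_percent


users = [f"user-{i}" for i in range(1000)]
for stage in (25, 50, 100):
    enabled = sum(is_enabled(u, stage) for u in users)
    print(f"stage {stage}%: {enabled} of {len(users)} users enabled")
```

Because the bucket depends only on the user ID, everyone enabled at 25% stays enabled at 50% and 100%, so metrics from earlier stages remain comparable as the rollout widens.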

Technical Implementation: Code That Actually Works

For technical teams implementing AI cost reduction strategies, here are proven patterns:

Efficient API Usage Pattern
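
One possible shape for this pattern, as a minimal sketch: deduplicate prompts so each distinct one is sent once, and retry transient failures with exponential backoff. `call_model` is a hypothetical callable standing in for whatever client you use, and `fake_model` exists only to exercise the logic. Normalizing case assumes your prompts are not case-sensitive.

```python
import time


def call_with_retry(call_model, prompt, max_attempts=3, base_delay=0.5, sleep=time.sleep):
    """Call the model, retrying transient failures with exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return call_model(prompt)
        except ConnectionError:
            if attempt == max_attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)


def normalize(prompt):
    """Collapse whitespace and case so trivially different prompts share a key."""
    return " ".join(prompt.split()).lower()


def answer_all(call_model, prompts, **retry_options):
    """Answer every prompt while calling the model once per distinct prompt."""
    results = {}
    for prompt in prompts:
        key = normalize(prompt)
        if key not in results:
            results[key] = call_with_retry(call_model, prompt, **retry_options)
    return [results[normalize(p)] for p in prompts]


# Stand-in for a real API client: counts calls and fails once to trigger a retry.
calls = {"count": 0, "failed": False}


def fake_model(prompt):
    calls["count"] += 1
    if not calls["failed"]:
        calls["failed"] = True
        raise ConnectionError("simulated transient error")
    return f"answer to: {prompt.strip()}"


prompts = ["Summarize Q3 sales", "summarize  q3 sales", "List open tickets"]
answers = answer_all(fake_model, prompts, sleep=lambda seconds: None)
print(answers)
print("calls attempted:", calls["count"])
```

Three prompts result in two successful calls plus one retried failure, instead of three successful calls; on real workloads with many repeated queries the difference is larger.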

Model Selection Logic
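
A minimal sketch of selection logic, assuming you have measured a quality score for each candidate on your own evaluation set: pick the cheapest model that clears the quality bar. Model names, prices, and scores below are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    cost_per_million_tokens: float  # USD, hypothetical
    quality_score: float            # 0 to 1, measured on your own evaluation set


def pick_model(options, min_quality):
    """Return the cheapest model whose measured quality meets the requirement."""
    eligible = [m for m in options if m.quality_score >= min_quality]
    if not eligible:
        raise ValueError(f"no model reaches quality {min_quality}")
    return min(eligible, key=lambda m: m.cost_per_million_tokens)


# Names, prices, and scores are made up for this example.
catalog = [
    ModelOption("small-3b", 0.06, 0.82),
    ModelOption("medium-70b", 0.90, 0.91),
    ModelOption("large-frontier", 10.00, 0.97),
]

print(pick_model(catalog, min_quality=0.80).name)  # small-3b
print(pick_model(catalog, min_quality=0.90).name)  # medium-70b
print(pick_model(catalog, min_quality=0.95).name)  # large-frontier
```

Raising an error when nothing qualifies is a deliberate choice here: silently falling back to a weaker model would hide a quality problem behind a lower bill.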

Cost Monitoring Dashboard
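
The data layer behind such a dashboard could look like the sketch below: it accumulates spend per feature from token counts and flags when a monthly budget is at risk. The class, the 80% alert ratio, and all prices are assumptions for illustration; a real setup would feed these rows into whatever dashboard tool you already use.

```python
from collections import defaultdict


class CostTracker:
    """Accumulates spend per feature and flags when a monthly budget is at risk."""

    def __init__(self, monthly_budget, alert_ratio=0.8):
        self.monthly_budget = monthly_budget
        self.alert_ratio = alert_ratio
        self.spend_by_feature = defaultdict(float)

    def record(self, feature, input_tokens, output_tokens,
               input_price_per_million, output_price_per_million):
        """Add the cost of one usage record and return it."""
        cost = (input_tokens * input_price_per_million
                + output_tokens * output_price_per_million) / 1_000_000
        self.spend_by_feature[feature] += cost
        return cost

    @property
    def total(self):
        return sum(self.spend_by_feature.values())

    def status(self):
        if self.total >= self.monthly_budget:
            return "over_budget"
        if self.total >= self.alert_ratio * self.monthly_budget:
            return "alert"
        return "ok"

    def report(self):
        """Rows of (feature, spend), highest spend first, ready for a table."""
        return sorted(self.spend_by_feature.items(), key=lambda row: row[1], reverse=True)


tracker = CostTracker(monthly_budget=100.0)
tracker.record("support_bot", 40_000_000, 10_000_000, 0.50, 1.50)     # 20 + 15 = 35
tracker.record("doc_processing", 70_000_000, 10_000_000, 0.50, 1.50)  # 35 + 15 = 50
print(tracker.report())
print(tracker.total, tracker.status())
```

With a budget of 100 and 85 spent, the tracker reports `alert`, which is the point at which a spending notification would fire.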

Measuring AI ROI: Metrics That Matter

AI cost reduction will only be successful if the right metrics are tracked in these four areas:

- Direct Cost Savings: Infrastructure spend, labor hours automated, error correction eliminated, and maintenance cost reduction.

- Efficiency Gains: Processing time reduction, throughput increase, shorter queue times, and better resource utilization.

- Quality Improvements: Lower error rates, higher accuracy, improved consistency, and stronger compliance.

- Business Impact: Customer satisfaction (NPS/CSAT), revenue gains from faster processing, competitive advantage, and innovation speed.

Bottom line: The best AI programs do not measure cost alone but cost, efficiency, quality, and business impact together.
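
To show how these metrics roll up into a single number, here is a short sketch of ROI and payback calculations. The formulas are the standard ones; the figures are hypothetical and not drawn from the case studies above.

```python
def roi_percent(annual_benefit, annual_cost):
    """Return on investment as a percentage of what was spent."""
    return (annual_benefit - annual_cost) / annual_cost * 100


def payback_months(upfront_cost, monthly_net_savings):
    """Months until cumulative net savings cover the upfront investment."""
    if monthly_net_savings <= 0:
        return float("inf")
    return upfront_cost / monthly_net_savings


# Hypothetical figures for one automated workflow.
benefit = 40_000 + 15_000   # direct savings plus the value of efficiency gains
cost = 22_000               # annual run cost, including maintenance
print(f"ROI: {roi_percent(benefit, cost):.0f}%")                      # ROI: 150%
print(f"Payback: {payback_months(18_000, 3_000):.1f} months")         # Payback: 6.0 months
```

Quality improvements and business impact are harder to price, so many teams report them alongside the ROI figure rather than folding them into it.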

Common AI Pitfalls and How to Avoid Them

- Premature Scaling: Scaling before optimizing results in costs three to five times higher. Scale pilots only after they have been optimized.

- Model Obsession: Teams excessively focus on model choice while neglecting data quality and clear requirements, which are significantly more important for most use cases.

- Ignoring Hidden Costs: While API fees are easy to spot, integration, maintenance, technical debt, and opportunity costs are less obvious. If “free” models require large teams to operate, then they are not really free.

- Lack of Governance: In the absence of cost controls and management, spending can increase rapidly. A single experiment that was not monitored resulted in a $45,000 loss that went unnoticed.

- Underestimating Change Management: Only 30% of success is due to technology. The remaining 70% consists of adoption, process change, and readiness.

The Future: What's Coming Next

AI cost reduction will move much faster than expected:

- Specialized inference chips: New-generation TPUs will give way to even more efficient purpose-built processors, which are expected to bring 5-10x cost reductions within the next 24 months.

- Model compression breakthroughs: Advanced quantization (2-bit and 1-bit models) will retain 95%+ of the original model accuracy while cutting costs by another factor of 10.

- Edge AI maturity: As more and more processing moves to the edge, network costs will be eliminated and latency will fall to microseconds.

- Agentic AI: Autonomous agents will manage several models and automatically select the cheapest one that satisfies the quality requirements for each task.

- Energy efficiency gains: Hardware changes are driving about 40% yearly improvements in energy efficiency, which are indispensable as AI usage scales exponentially.

The next AI wave is not just smarter, but also dramatically cheaper, faster, and more energy-efficient.

Getting Started: Your AI Action Plan

- This Week: Review your existing AI budget and figure out which three processes are costing you the most.

- This Month: Work out the total addressable opportunity. What if the costs were reduced by 40%?

- This Quarter: Start a pilot program with a single high-volume process where the cost savings are easy to measure.

- This Year: Extend the successful pilots and develop in-house AI cost optimization skills.

Bottom line: The size of the budget does not determine the success of AI. It is about understanding the economics and making a move quickly.

Conclusion

The 280-fold reduction in AI inference costs is not just a number; it represents a fundamental reshaping of what is economically viable. Projects that were considered fantasies three years ago are now the norm. The question is not whether your organization will implement AI cost reduction strategies, but whether you will do it before or after your competitors.

The technology is mature. The tools are accessible. The ROI is proven. The only thing missing is decisive action.

At Orbilon Technologies, we have helped organizations across various industries achieve dramatic cost reductions while scaling their AI capabilities. The time to act is now, while early movers still hold a competitive advantage.
